AI 101: Large Language Models and the ABCs of Workers’ Comp

The Trained A-Eye

Sharpen your pencils, grab your notebooks, and settle in because class is officially back in session! And while Drake University started back last week and CLM Claims College is in full swing this week, we have a wonderful new series to share with you to further your education this fall with your favorite professor, Dr. Claire. That’s me!

Welcome to Back to School with AI, a series designed to explore what artificial intelligence really means for workers’ compensation. Over the next twelve articles, we will tackle everything from the basics of prompting to the ethics of machine decision-making, all through the lens of the industry we live and breathe every day.

This project is inspired by the brilliant AI Summer School series created by two of my professor friends at Drake University: Chris Snider and Christopher Porter. These two gentlemen are known as the Innovation Profs and if you are not following them, you should. I learn so much from their weekly newsletters to keep me on the up and up, so I am going to share with you. This summer, THE Innovation Profs broke down the essentials of generative AI for a broad audience, and I could not resist taking their spark and bringing it home to workers’ compensation. Why? Because let’s be honest: our industry does not get a reputation for being at the cutting edge of technology. Still using those green screens? You get it. If there has ever been a moment to pay attention, this is it.

Today, we are starting with the foundation: Large Language Models, or LLMs. Think of this as your “AI 101” and you are at the first day of class. The first day of class is for new markers!! And also, it is where we set the tone, meet the teacher’s pet (ChatGPT, obviously), and talk about how this shiny new tool might help us in claims, compliance, and communication. It may make us better people too… who knew?

What Exactly Is a Large Language Model?

At its core, a large language model is a type of artificial intelligence trained on enormous amounts of text. It learns patterns in language, such as which words are most likely to come after other words and how words and ideas connect. As a result, the LLM can generate new sentences, paragraphs, or even whole reports that sound remarkably human.

The “large” part simply refers to the massive scale of data and computing power behind it. It reminds me of how insurance is based upon the law of large numbers. Imagine giving a student access to every textbook in the library and letting them practice writing essays a billion times over. By the time finals roll around, that student would be scarily good at predicting what a strong essay should look like. That is what LLMs do with language. They predict what the next words should be based upon patterns that have come before.

The result? Tools like OpenAI’s ChatGPT, Microsoft Copilot, Google Gemini, and Anthropic’s Claude. ChatGPT is the most popular LLM, used for everything from email drafts to complex analysis. ChatGPT went from 0 to 100 million users in two months! To put that pace in perspective, the cell phone took 16 years to reach the same user base. You have probably seen Microsoft Copilot, as it is built right into Word, Outlook, and Excel, helping workers without leaving their daily tools. Google Gemini is Google’s response, which is integrated with Google Docs, Gmail, and Sheets. Claude is a quieter but powerful player in the LLM space and has been praised for its clarity and thoughtful responses. Others do exist, such as Meta’s LLaMA, xAI’s Grok, and Mistral; however, the big four are the platforms you have more than likely heard of or are seeing in your day-to-day work right now.

Why Should Workers’ Compensation Care?

Now, I know what you are thinking: “Cool tech, but what does this have to do with my claims desk or return-to-work plan?” Great question! The reality is that LLMs are already reshaping how we work. Please note: LLMs are not here to replace adjusters, nurses, or human resource professionals. LLMs are here to help this space work smarter, not harder by making the administrative, repetitive, or time-consuming parts of our jobs faster and easier… and as a result, we can focus more on people. And PEOPLE are at the heart of what we do in workers’ compensation.

Think of LLMs as the ultimate teaching assistant in your work comp “classroom.” These LLMs do not take the test for you, but they help prep the study guides, grade the practice quizzes, and free up your time to teach. This makes you more effective and efficient by providing you the tools and resources to do more with the time you have. Intentionality is key! If you’ve ever wished for a clone who could handle your paperwork while you focused on the human side of this job, LLMs are as close as it gets. Here are some ways LLMs can show up to help YOU.

Claims Communications. Drafting clear, empathetic letters to injured workers instead of reusing cold, legal-heavy templates. LLMs can help you make this information much more consumable for your audience. Statutes are hard to understand, so break them down! And if you are not sure how, ahh, there is an LLM that can help you phrase it so a fifth grader could understand. (Ideal communication should land between a sixth- and eighth-grade reading level, in case you are wondering about consumability!)

Training & Education. Creating supervisor handouts, safety reminders, or FAQ sheets tailored to specific industries. I don’t know about you, but I am not a spur of the moment creative, and this is where LLMs can help you be more inspiring through visual communication to help emphasize educational points or reminders.

Fraud Detection Support. Spotting unusual language patterns across reports that might flag inconsistencies. Words and verbiage choice matter. People are trained to detect language nuances and if you are not one of those trained professionals, LLMs can help you along the way, assisting you to work smarter, not harder.

Policy & Procedure Writing. Turning complex regulation updates into plain-language explanations for teams. Sometimes we think what we are communicating makes sense, and it does, to us, the drafter of the communication. LLMs can help with how written communication can be received. This is like having a personal editor always within reach.

Working with People. People are hard. We are multi-faceted, multi-functional, multi-dimensional, multi-emotional beings. Two things can be true for any one of us at a given time. With this complexity, sometimes we have no idea how to handle a situation and LLMs can offer suggestions on what to say next, how to approach a situation, or when to simply be quiet and say nothing. (Yes, I have had it tell me this before. If you don’t know what to say in a situation, maybe nothing is the answer for this moment. And. My LLM was right.)

Picking Your Platform: The Big Four

Like choosing a calculator for math class, the LLM you use depends on what you need. The truth? You do not have to master them all. Start with one that integrates with your existing tools. The learning curve is less steep than you think, and most people are amazed by how quickly they get comfortable once they start experimenting! ChatGPT is flexible, intuitive, and great for brainstorming, drafting, and role-play exercises. (No one likes doing those, so I prefer to call those simulations.) Microsoft Copilot is basically a tutor sitting right in the margin of your spreadsheets and works well for people already living in Excel or Outlook. If you are old enough to remember, Clippy was present WAY back in the day with Word where the avatar could help you start a new document or share a resume template. Google Gemini seamlessly fits into organizations running on Gmail or utilizing Google Docs. Claude stands out for summarizing long, complex documents with accuracy. Which one to use? Try one and see how you feel.

How Do LLMs Work? (Without the Boring Math)

We could dive into “transformers” and “neural nets,” but this is workers’ comp school, not computer science class, so I will leave that to the Innovation Profs for further explanation. What you need to know is that LLMs don’t “think” the way humans do. They predict the next likely word or phrase based on patterns. As predictors, LLMs do not know truth from fiction; they work from probability. (This is why fact-checking their work is critical!) The more you interact with an LLM, the better your results get, because prompting, the way you ask a question, shapes the quality of the answer you will get from your LLM. Think of it like teaching a brand-new adjuster. If you say, “Handle this claim,” they will stumble until they figure it out. If you give step-by-step guidance such as “Review medical notes, summarize treatment, draft a letter,” the new adjuster has a better chance to succeed. LLMs need that same clarity.
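For readers who like to peek under the hood, here is a minimal Python sketch of the “predict the next word” idea. It is a toy and not how production LLMs are built: it simply counts which word most often follows another in a few made-up sentences, whereas real LLMs use neural networks trained on vastly more text. The function names (`train_bigrams`, `predict_next`) and the training sentences are invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequently seen next word, or None if the word is unseen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training sentences, invented for this illustration.
training_text = (
    "the injured worker returned to modified duty "
    "the injured worker called the adjuster "
    "the adjuster called the injured worker"
)

model = train_bigrams(training_text)
print(predict_next(model, "injured"))   # prints "worker"
print(predict_next(model, "modified"))  # prints "duty"
```

Notice that the toy has no idea whether “worker” is true or appropriate; it only knows that “worker” followed “injured” most often in what it has seen. That is the same reason fact-checking matters with the real tools.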

The Human Factor

The biggest misconception about AI in workers’ comp is fear of replacement. Adjusters, HR professionals, risk managers, providers, safety professionals ... we are not being erased! Please note that what is being erased is the busywork. LLMs can pull data and draft text, but they cannot sit across from an injured worker, hear the tremble in their voice, and know when to soften the conversation. They cannot walk a supervisor through a tough return-to-work decision with empathy. They cannot see the bigger cultural context of a workplace. LLMs build from language patterns. We grow through experiences with people. That is where we shine. AI doesn’t eliminate the need for the human element: it highlights it, improves it, and shows us we CAN be better… we simply need to make the CHOICE to do so.

Class Takeaway

Large language models are the ABCs of the AI world. LLMs are about freeing us to do what matters most: caring for people, supporting recovery, and connecting with empathy. They are not about making workers’ comp colder or more robotic. As we head into this school year together, I challenge you to think: Where could an LLM give me back 10 minutes today? An hour this week? A day this month? That’s time you can spend where it matters, with your people. For me? ChatGPT and I are going to be in the kitchen as I finally learn to cook… maybe. Or it can help me organize my schedule with time blocking so I can get that down to a science! That is all for now.

Class dismissed.

Show & Tell: Multimedia Tools for Training & Injured Worker Support

Pack your art supplies and get ready for Show & Tell, because this week in Back to School with AI we are diving into the colorful, creative side of artificial intelligence. Last week in AI 101 we met the teacher’s pet, Large Language Models, and learned how they can help us with the ABCs of workers’ comp. This week, we are moving beyond words on a page to more visual communication. AI also includes images, audio, and video that are transforming how we learn and communicate well beyond text alone. This shift opens new opportunities for workers’ compensation professionals to connect in more engaging and human ways. Most of us are visual learners, so we should communicate visually as well!

This project is inspired by my professor friends Chris Snider and Christopher Porter, known as the Innovation Profs, who created the AI Summer School series. Their lessons sparked me to ask: how do these multimedia tools fit within the workers’ compensation industry? The answer is exciting and colorful so you know I jumped right in! Multimedia, while used heavily in advertising and for entertainment, can be effectively used to train employees, communicate with injured workers, and build stronger workplace cultures. By translating these tools into our workers’ compensation world, we are adding fresh crayons to our communication box.

Picture Day! AI-Generated Images in Workers’ Compensation

Picture day at school was always a mix of nerves and forced smiles for me. In today’s workplace we have a new kind of picture day thanks to AI-generated image tools. These platforms allow us to create professional, tailored visuals on demand, which is a game changer for those of us who usually rely on stock photography. With tools such as Midjourney, Ideogram, and Google Imagen, safety posters, ergonomic illustrations, and training visuals can be designed quickly and customized to specific workforces. This saves time, reduces costs, and ensures that the message is visually aligned with the audience. By making image creation accessible, AI is giving us the ability to design with purpose and clarity.

The potential of AI images does come with challenges. Consistency of style can sometimes be an issue, and misspelled text occasionally appears in graphics. There are also important questions about copyright and intellectual property ownership when these tools generate outputs. Despite these concerns, the advantages often outweigh the drawbacks. Instead of spending hours hunting for the right photo, we can now produce meaningful and relevant visuals that help workers understand critical safety messages. This ability to communicate visually can bridge literacy gaps and make training more effective across diverse employee populations.

Music Class: AI Audio for Human Connection

If picture day captures the visuals, then music class brings us to the sound of AI-generated audio. Audio tools have advanced dramatically, and now text-to-speech voices sound almost indistinguishable from human voices. ElevenLabs is a leader in this field, offering customizable tone, pacing, and emotion in its generated voices. For workers’ comp, this means we can create multilingual safety briefings, empathetic audio explanations of return-to-work policies, or even guided stress-reduction exercises for injured workers. Having information available in audio form provides accessibility for those who may not connect well with written text. This adds another layer of support in an already complex recovery process.

Another exciting development in audio is the ease of editing. Descript, for example, makes editing audio as simple as editing a Word document. This innovation allows trainers and communicators to refine materials without needing advanced technical skills. For claims professionals or HR teams, this means less time wrestling with technology and more time focusing on meaningful communication. The presence of human-like audio gives workers’ compensation professionals a new tool to build empathy and clarity into their interactions. By hearing a kind and supportive voice, injured workers may feel more reassured and connected during uncertain times.

Film Club: AI-Generated Video in Training & Communication

If visuals and audio are evolving, then the film club of AI takes us to the most dynamic change: video. AI-generated video has moved from experimental to practical, offering the ability to create professional-quality clips with minimal resources. Tools such as Runway, Pika, Sora, and Veo can generate video from text prompts or transform static images into dynamic video content. In workers’ comp, this could mean creating short training videos that demonstrate safe lifting techniques, producing explainer videos to help injured workers understand the claims process, or developing scenario-based clips that guide supervisors through difficult return-to-work conversations. The ability to produce tailored video content in minutes, rather than weeks, is revolutionary.

Platforms such as HeyGen and Synthesia even allow for avatar-based videos. Instead of hiring actors, organizations can use avatars to deliver scripts in a professional and approachable way. This makes it possible for smaller companies with limited resources to create polished training and communication materials. Of course, deepfake concerns and ethical considerations must be addressed, but the opportunities to provide clear, culturally relevant, and accessible content outweigh the risks when implemented responsibly. Video captures attention like no other medium, and with AI, it is finally within reach for everyone in our industry.

Why Multimedia Matters in Workers’ Compensation

The reason multimedia matters so much in workers’ compensation is that people learn in different ways. It is up to us as work comp professionals to communicate in a manner that is consumable for our audience, whether that be employees from a safety perspective or injured workers going through their claims experience. Some people absorb information best through reading, others through listening, and many through seeing examples in action. Visual communication is imperative! By adding multimedia to our toolbox, we create opportunities to meet employees, supervisors, and injured workers where they are. An injured worker overwhelmed by paperwork may benefit from a simple video walkthrough. A supervisor with a packed schedule may prefer listening to a podcast-style update during a commute. Safety professionals can enhance toolbox talks with AI-generated images that spark discussion and engagement.

Personalization through multimedia creates a pathway to building trust. Just as with LLMs, it is important to remember that these tools do not replace people. These tools amplify the human element. A video can never replace an empathetic conversation with an injured worker. A voiceover cannot take the place of a compassionate nurse case manager. What multimedia tools can do is remove barriers to understanding and communication. Having multiple ways to communicate with a multilingual workforce is a game changer. Multimedia tools can provide access in multiple languages, simplify complex processes, and deliver consistent messaging. By making content clearer and more engaging, these tools free up workers’ comp professionals to focus on empathy, strategy, and connection.

Class Takeaway

The class takeaway this week is simple: multimedia AI tools are no longer novelties. They are becoming everyday creative companions. For workers’ compensation, these tools provide the opportunity to communicate with greater clarity, improve training outcomes, and create human-centered support materials. The homework for all of us is to examine one communication piece we use often and ask whether it would be more effective if it included visuals, audio, or video. Need some examples? Think a claims packet, return-to-work policy, or safety reminder for starters. By experimenting with multimedia, we can find new ways to make information stick and keep people engaged. When we use AI tools to connect, we make the human side of workers’ compensation even stronger.

Class dismissed.

English Class: The Art of Prompting in Claims & Risk Management

Back to School with Prompts – with Dr. Claire Muselman

Welcome back to class! In English, we learned that the words we choose matter. They carry tone, shape meaning, and influence how others respond. The same holds true in the world of artificial intelligence, where prompting determines the quality of the results. Prompting is the way we phrase our requests to AI tools. The words we choose to use with AI matter. For workers’ compensation, where communication can make or break trust, prompting is more than a tech skill. Words, phrasing, and prompting are a literacy we all need to master.

This project is inspired by my professor friends Chris Snider and Christopher Porter, known as the Innovation Profs, who created the AI Summer School series. Their exploration of prompting made me wonder how this skill translates into workers’ compensation, where communication is at the heart of everything we do. The connection is powerful because prompting is essentially about clarity, tone, and purpose which are the same ingredients that shape great claims notes, supportive return-to-work letters, and policies people can understand! Ironic, don’t you think? By bringing this framework into our space, we are sharpening our pencils and learning how to write with intention, both for AI and for the people we serve.

Defining Prompting in Our World

Prompting can be defined as the process of guiding AI to generate relevant, accurate, and useful results. Think of it like drafting a claims note: if you write “employee hurt at work,” you are not saying much. You have barely scratched the surface. We need clarity and specificity versus vague language and jargon. In contrast, if you write “employee slipped in the warehouse on wet flooring, sustaining a left ankle sprain, light duty requested,” you have now provided context that helps the next reader act quickly and appropriately. AI functions in the same way. The clearer the input, the more valuable the output.

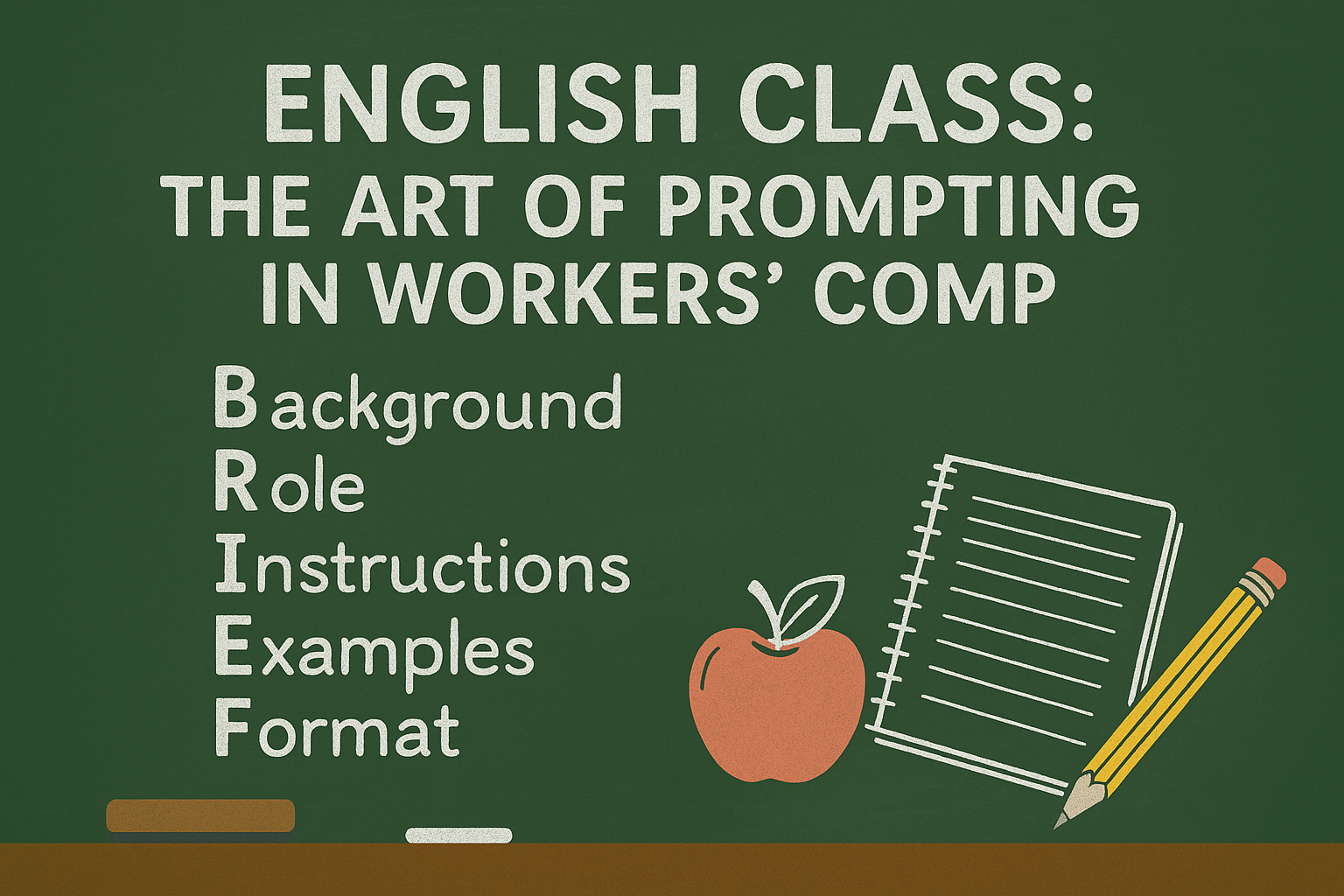

The BRIEF Framework

The Innovation Profs shared a simple yet powerful prompting framework called BRIEF, which stands for background, role, instructions, examples, and format. Inspired by the Innovation Profs’ AI Summer School, we can bring BRIEF into our workers’ comp classroom. This approach helps us remember the five elements that turn a vague question into a strong prompt. Think of it as the grammar of AI, giving structure to our digital conversations.

B = Background

Background is where everything starts. Just as a claim file is incomplete without the injury details, jurisdiction, and employer information, a prompt is incomplete without context. If you tell AI, “Write a letter,” the response will be generic and impersonal. But if you provide background to create context, such as “Write a letter to an injured worker at a Midwest manufacturing company in Iowa who sprained their back, explain next steps, and emphasize empathy,” suddenly the AI has the framework to build something useful. Context is the difference between a half-filled form and a detailed, actionable claim.

R = Role

Role is equally important. In workers’ compensation, we rely on specialized perspectives. A nurse case manager communicates differently than a risk manager, and a claims adjuster has a different tone than an HR trainer or one of our friends from the finance department. When you tell AI what role to assume, you are shaping its voice and lens. Where you stand says a lot about what you see and defining a role can shape this perspective. If you say, “Act as a workers’ comp adjuster writing to an injured worker,” you will get something more tailored than if you simply ask, “Write me a letter.” Defining roles ensures that tone, expertise, and focus are aligned with your audience.

I = Instructions

Instructions are the meat of the prompt. Workers’ compensation professionals know the value of clarity. Imagine a supervisor telling an employee, “Come back to work soon.” Compare that with, “Report to the warehouse Monday at 9 a.m. for modified duty, with lifting limited to ten pounds.” Which sets expectations better? AI thrives on specificity. “Summarize this statute in three sentences highlighting expectations and parameters for the claims adjudication process so a fifth grader could understand” will produce something far more actionable and readable than “Summarize this.” Clear instructions reduce trial and error and make AI a true collaborator. Plus, AI can break concepts down for easy consumption and in workers’ compensation, we are trying to make things consumable for different audiences. Simplicity and specificity coupled with brevity are where the magic happens!

E = Examples

Examples strengthen prompts by giving AI a model to follow. Think about how we train new adjusters: we show them examples of strong letters and then ask the adjuster to create their own version of a letter stating a claim has been found non-compensable. AI learns in the same way. If you paste a sample communication and say, “Model the style and tone of this letter,” you will get results closer to your organization’s voice. (Think mission, vision, values… this should come across in ALL aspects of communication.) Examples are the bridge between theory and practice. They tell the AI, “This is what good looks like.”

F = Format

Format is the final step, and it closes the loop. In claims, we communicate in a vast arena. We write diary notes, policy summaries, letters, or flash notices, each with its own format. AI needs the same direction. If you tell it to “Draft a table of restrictions” or “Write a short paragraph in plain language,” you will get content that fits your purpose. Without format, you may receive something accurate but unusable. By shaping the container for the content, you make AI an efficient partner.

A Workers’ Comp Example

To see BRIEF in action, let us walk through a workers’ compensation example together. Imagine you need to communicate modified duty to an injured worker. Your prompt might look like this: “Background: A manufacturing employee has sprained their back and is worried about returning too soon. Role: You are a claims adjuster writing in a supportive and empathetic tone. Instructions: Explain modified duty, emphasize that it supports healing, and invite the worker to reach out with questions. Examples: Here is a supportive line we have used before: ‘We are here to make sure you heal while staying connected to the workplace.’ Format: Draft a short letter, no more than 150 words.” With this prompt, AI is far more likely to produce a letter that reassures the worker, supports recovery, and reflects the values of your organization.

Please note: Be aware of what you are putting into the prompts and please abide by your organization’s AI philosophies, policies, and parameters. As you can see in the example, no personal information has been provided. You can achieve positive results without giving away unnecessary information.
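If your team likes templates, the BRIEF prompt above can also be assembled from its five parts in a few lines of Python. This is a minimal sketch, not an official BRIEF tool: the `BriefPrompt` class, its field names, and the `render` method are hypothetical names chosen for this illustration, and the text comes straight from the modified duty example, so it contains no personal information.

```python
from dataclasses import dataclass


@dataclass
class BriefPrompt:
    """Hypothetical container for the five BRIEF elements; any may be left blank."""
    background: str = ""
    role: str = ""
    instructions: str = ""
    examples: str = ""
    output_format: str = ""

    def render(self) -> str:
        """Join the filled-in elements into one labeled prompt, skipping blanks."""
        parts = [
            ("Background", self.background),
            ("Role", self.role),
            ("Instructions", self.instructions),
            ("Examples", self.examples),
            ("Format", self.output_format),
        ]
        return "\n".join(f"{label}: {text}" for label, text in parts if text.strip())


prompt = BriefPrompt(
    background="A manufacturing employee has sprained their back and is worried about returning too soon.",
    role="You are a claims adjuster writing in a supportive and empathetic tone.",
    instructions="Explain modified duty, emphasize that it supports healing, and invite the worker to reach out with questions.",
    examples="Here is a supportive line we have used before: 'We are here to make sure you heal while staying connected to the workplace.'",
    output_format="Draft a short letter, no more than 150 words.",
)

# The printed text is what you would paste into your AI tool of choice.
print(prompt.render())
```

Because blank elements are skipped, the same template works when Background and Role are all you need.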

Why Prompting Matters

The beauty of BRIEF is that it is flexible. You do not always need all five elements, but the more information you provide, the better the response. Sometimes Background and Role are enough. Other times, Format becomes the deciding factor. The framework is a memory tool, reminding us that good communication is intentional, thoughtful, and structured regardless of whether it is being compiled by a person or a machine. It is about clarity.

Prompting as a Time Saver

Strong prompting makes the most of your time and focus. Work smarter, not harder! Workers’ compensation professionals spend countless hours drafting letters, summarizing notes, and rewriting policy updates, let alone figuring out how to communicate with a variety of different audiences. With stronger prompts, AI can handle the heavy lifting of first drafts, freeing us to refine and personalize. If AI can support work on this front, the human side can spend more time listening to injured workers, more time strategizing return-to-work programs, and more time building healthier workplace cultures. Prompting becomes a skill and a way to elevate the human side of our work.

Class Takeaway

English class always ended with an essay, and here is ours: prompting is the literacy of AI. The words we choose shape the quality of our results, both in technology and in people. Just as clear claims notes lead to better outcomes, clear prompts lead to better AI collaboration. For your homework, I challenge you to take one piece of your own writing and reframe it as a BRIEF prompt. Try using a standard claims letter or a safety reminder. See what AI gives back. Then ask yourself: how can this tool give me back ten minutes today, an hour this week, or even a day this month? The more we practice, the more fluent we become. Again, check your organization’s philosophies, policies, and procedures to stay in alignment.

Class dismissed.

Library Day: Building a Prompt Library for Adjusters & Employers

Welcome back, classmates! If last week’s English lesson taught us the art of crafting strong prompts, then today we head to the library, my favorite place in school! The library has always been a place of organization, inspiration, and discovery. In the world of artificial intelligence, that is exactly what a prompt library can be. We define a prompt library as a curated collection of prompts that saves us time, fuels creativity, and ensures consistency across the way we use AI. For workers’ compensation professionals, this could become one of the most powerful tools in our communication and claims management toolbox.

This project is inspired by my professor friends Chris Snider and Christopher Porter, known as the Innovation Profs, who created the AI Summer School series. Their exploration of prompting sparked me to see how this concept could live in the workers’ comp space. Their lessons lit up my imagination and reminded me that while prompts can be tested, revised, and polished individually, their true power emerges when they are collected, shared, and reused. A prompt library transforms isolated brilliance into a strategic asset that can benefit teams, organizations, and even our entire industry.

What Is a Prompt Library?

A prompt library is simply a curated collection of prompts including but not limited to questions, statements, or instructions designed to guide AI into producing meaningful results. Think of it as a recipe book for AI interactions. Instead of guessing every time you step into the kitchen, you pull a tried-and-true recipe off the shelf and know you will get something delicious. In workers’ compensation, this means instead of writing every letter, claim summary, or policy explanation from scratch, you could select a reliable prompt that already produces the tone, clarity, and structure you need. A well-built library turns prompting from an individual skill into a collective resource.

Why Build a Prompt Library?

The benefits of a prompt library are clear, especially for an industry like ours where consistency and accuracy are vital. First, prompt libraries create uniformity across communication. When injured workers, supervisors, and HR teams receive messages built from consistent prompts, the quality of the experience improves. Second, libraries save time. No more starting from scratch with every request; prompts that already work can be reused, saving hours of effort across a team. Third, libraries spark creativity. Seeing how a colleague structured a prompt can inspire new ways to approach training, communication, or compliance. Finally, libraries support collaboration. When adjusters, risk managers, or HR professionals share their best prompts, the collective wisdom grows stronger.

In workers’ compensation, where multiple people often touch a claim, collaboration is key. A shared library ensures that the empathy of a nurse case manager, the detail of a claims adjuster, and the compliance focus of a risk manager can all live side by side. Together, these voices create consistency, clarity, and compassion.

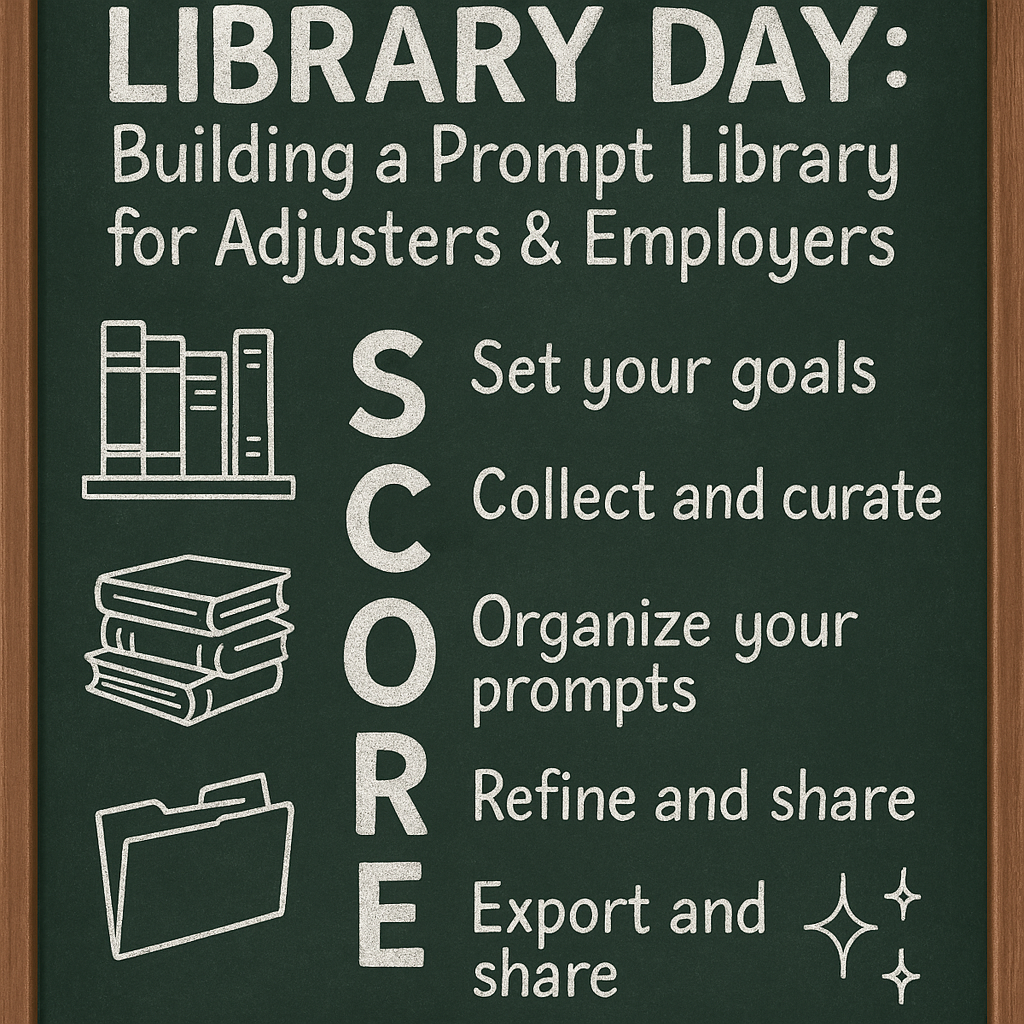

The SCORE Framework for Building a Prompt Library

Just as BRIEF gave us a simple way to think about crafting prompts, SCORE provides a roadmap for building and maintaining a prompt library. Let’s work through SCORE together in the context of workers’ compensation.

S - Set Your Goals

Every library has a purpose, and a prompt library is no different. For workers’ comp, your goal might be to improve injured worker communication, streamline return-to-work processes, or enhance internal training. Maybe your team wants faster first drafts of claims notes or more accessible explanations of state statutes. By setting your goals clearly, you give your library direction. Without goals, a library is just a messy stack of books. With goals, it becomes a resource that serves your mission.

C - Collect and Curate Prompts

The next step is to gather useful prompts. These can come from your own practice, your team’s best work, or even external sources that you adapt to your industry. You can also ask AI itself to help brainstorm prompts, testing them until they fit your needs. In workers’ comp, prompts could include letters explaining modified duty, training materials for supervisors, safety reminders, or claims summaries. The key is to curate by keeping the prompts that actually work and discarding the ones that confuse or complicate. Quality matters more than quantity.

O - Organize Your Prompts

A library is only useful if you can find the book you need, and the same applies to prompts. Organize them by categories that make sense for your workflow: injured worker communication, supervisor resources, claims notes, compliance updates, or training scripts. Within each category, prompts can be labeled for tone, complexity, or intended audience. An organized library reduces friction and makes it easy for any team member to pull the right tool at the right time.
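For readers who like to see the idea in code, here is a minimal sketch of what "organized" can look like. It assumes each prompt is stored with a category, audience, and tone label; the `Prompt` class, the `find_prompts` helper, and the sample entries are all hypothetical illustrations, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prompt:
    """One entry in a prompt library (hypothetical structure)."""
    title: str
    category: str   # workflow category, e.g. "claims notes"
    audience: str   # who the output is written for
    tone: str       # e.g. "empathetic", "neutral"
    text: str       # the prompt itself


# Hypothetical sample entries, for illustration only.
LIBRARY = [
    Prompt("Modified duty letter", "injured worker communication",
           "injured worker", "empathetic",
           "Draft a letter explaining modified duty in plain language."),
    Prompt("Return-to-work conversation coaching", "supervisor resources",
           "supervisor", "supportive",
           "Coach a supervisor on welcoming an employee back after an absence."),
    Prompt("Diary note summary", "claims notes",
           "claims team", "neutral",
           "Summarize today's claim activity in one short paragraph."),
]


def find_prompts(library, category=None, audience=None, tone=None):
    """Return the prompts that match every label that was supplied."""
    results = []
    for prompt in library:
        if category is not None and prompt.category != category:
            continue
        if audience is not None and prompt.audience != audience:
            continue
        if tone is not None and prompt.tone != tone:
            continue
        results.append(prompt)
    return results
```

Calling `find_prompts(LIBRARY, category="supervisor resources")` pulls only the supervisor prompts. The same labels work just as well as columns in a spreadsheet; the point is that every prompt carries them.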

R - Refine Your Prompts

No prompt is perfect on the first try, just as no claims process is flawless the first time you design it. Refinement is the heartbeat of a strong library. Test your prompts, review the results, and adjust them until they reliably deliver what you need. In workers’ comp, that may mean revising prompts until they produce communications that are empathetic yet compliant, thorough yet clear. Refinement ensures that the library stays alive and continues to evolve as needs and technologies shift.

E - Export and Share Your Library

A library is meant to be shared. Whether in a shared Word document, a spreadsheet, or a team platform, exporting your library makes it accessible to everyone who needs it. When a claims team, HR department, or risk management unit all pull from the same library, they build consistency across the organization. For larger employers or carriers, this could extend to vendor partners, ensuring that every stakeholder communicates with the same voice and clarity. A shared library saves time and strengthens culture by aligning communication across the board.
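If your team shares the library as a spreadsheet, the export step can be as simple as writing the prompts to a CSV file that opens in any spreadsheet program. The sketch below uses only Python's standard `csv` module; the column names and the sample rows are assumptions chosen for illustration.

```python
import csv
import io

# Hypothetical column layout for a shared prompt library.
FIELDS = ["category", "title", "audience", "prompt"]

# Hypothetical sample rows, for illustration only.
SAMPLE_LIBRARY = [
    {"category": "injured worker communication",
     "title": "Modified duty letter",
     "audience": "injured worker",
     "prompt": "Draft a short, empathetic letter explaining modified duty."},
    {"category": "supervisor resources",
     "title": "Welcome-back talking points",
     "audience": "supervisor",
     "prompt": 'List three supportive things to say, such as "We are glad you are back."'},
]


def library_to_csv(prompts):
    """Serialize a list of prompt dictionaries to CSV text."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(prompts)
    return buffer.getvalue()


def library_from_csv(csv_text):
    """Read CSV text back into a list of prompt dictionaries."""
    reader = csv.DictReader(io.StringIO(csv_text, newline=""))
    return [dict(row) for row in reader]
```

Writing the result of `library_to_csv(SAMPLE_LIBRARY)` to a file gives colleagues something they can open, sort, and filter, and `library_from_csv` reads it back unchanged, commas and quotation marks included.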

A Workers’ Comp Example of a Prompt Library

Imagine building a prompt library for return-to-work. Under one category, you might have prompts that draft empathetic letters to injured workers explaining modified duty. Another category could include prompts that coach supervisors on having supportive conversations with employees returning after an absence. A third category might offer prompts that create quick handouts or FAQ sheets about transitional assignments. This collection becomes a library that saves hours of writing time and ensures that every communication feels consistent, compassionate, and clear.

Why This Matters for Our Industry

Workers’ compensation thrives on trust, and trust is built on communication. A prompt library improves efficiency, and saving time is a gift. A prompt library also raises the standard of communication across our industry. Injured workers deserve clarity. Supervisors deserve confidence. Teams deserve resources that support them in doing their jobs well. By embracing prompt libraries, we take a step toward a future where every letter, every summary, and every training resource reflects the best of who we are.

Class Takeaway

A well-crafted prompt is a powerful tool, but a library of prompts transforms power into strategy. By applying SCORE, workers’ compensation professionals can build resources that ensure consistency, save time, spark creativity, and encourage collaboration. Just like the library card we treasured as kids, and still do as adults for Libby, a prompt library gives us access to worlds of possibility. Like any good library, its value grows the more we use it.

Here is your homework: start your own prompt library. Collect three prompts you have tested, organize them in a simple document, and share them with one colleague. See what happens when your library grows. The future of workers’ compensation communication may very well be written one prompt at a time.

Class dismissed.

Science Lab: Custom GPTs for Workers’ Comp Programs

The Trained A-Eye

Welcome back, classmates! Last week we spent our time in the library, learning how to organize prompts into a prompt library using the SCORE framework. Libraries make our lives easier because they keep resources consistent, efficient, and shareable. This is great and also…what if you find yourself using the same cluster of prompts over and over again, each time adding the same background information? It’s time to get more efficient. Welcome to science lab, where we experiment, innovate, and push the boundaries. Today our experiment: building custom GPTs tailored for workers’ compensation.

This project is inspired by my professor friends Chris Snider and Christopher Porter, the Innovation Profs, who created the AI Summer School series. Their work on prompt libraries and custom GPTs showed me how powerful these tools could be, and I adapted it for the world of workers’ compensation. Our industry is built on repetition and consistency: claims letters, policy explanations, and RTW instructions. Custom GPTs allow us to automate the background noise so we can focus on the human impact.

What Is a Custom GPT?

A custom GPT is a personalized version of ChatGPT that is tailored for a specific purpose. You package background information, roles, examples, and formatting into the GPT itself, so you do not have to repeat them every time. Imagine it as a lab experiment where you pre-mix all the chemicals so you do not have to measure ingredients for every test. You are coming to the table with the formula bottled and ready. For workers’ comp professionals, this means having a GPT that already understands your tone, jurisdiction, and communication style, making your daily work much smoother and faster. If you service multiple jurisdictions, you can make multiple custom GPTs.

Why Custom GPTs Matter in Workers’ Comp

Workers’ comp thrives on repeatable processes that must still be carried out with care. Enter the human elements we so direly need. Adjusters draft dozens of similar letters each week, HR managers explain modified duty policies over and over, and nurse case managers repeat treatment guidelines across multiple cases. A custom GPT bakes the background into the system, so each request starts from a place of shared understanding and reduces the chance of inconsistency. This can remove friction that drains energy and wastes time. When you streamline repetitive communication, you reclaim hours that can be redirected into empathy, listening, and problem-solving.

Custom GPTs raise the standard for consistency across organizations. Instead of telling AI over and over, “Act as a claims adjuster writing to an injured worker, keep it empathetic, use plain language, aim for 150 words,” you build a custom GPT that takes those assumptions for granted. Now you can simply say: “Write a modified duty explanation for a warehouse worker with a sprained ankle.” The GPT delivers in the tone, format, and length you want, without you needing to spell it out. This shift ensures that every worker receives communication aligned with organizational values, not just the adjuster’s individual style that day. Over time, this builds stronger trust with injured workers and demonstrates professionalism across the board. Consistency and repeatability are key. Custom GPTs can help.

Building Blocks: From BRIEF to Custom GPTs

Remember the BRIEF framework? Background, Role, Instructions, Examples, Format. A custom GPT is like bottling BRIEF into a single reusable container so you do not have to rewrite the same details. The GPT “remembers” the Background, Role, Examples, and Format under the hood, leaving you free to only provide the Instructions each time. Think about a claims diary note. Instead of writing, “Background: Manufacturing company in Iowa. Role: Claims examiner. Examples: Use empathetic language. Format: Short paragraph for diary note,” every single time, your custom GPT already knows all of that. Remember to be mindful about what information you are putting into your custom GPT. You can do all of this without entering personally identifiable information. Work smarter… not harder. Custom GPTs essentially automate the setup, so you can jump straight into the work that matters.
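To make the "bottling" idea concrete, here is a small sketch of the same principle in code: the Background, Role, Examples, and Format are stored once, and only the Instructions change with each request. This is an analogy, not a description of how custom GPTs work internally; the `BriefTemplate` class and its method are hypothetical names, and the stored values come from the diary note example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BriefTemplate:
    """Stores the parts of BRIEF that stay the same between requests."""
    background: str
    role: str
    examples: str
    output_format: str

    def build_prompt(self, instructions: str) -> str:
        """Combine the stored parts with this request's Instructions."""
        return "\n".join([
            f"Background: {self.background}",
            f"Role: {self.role}",
            f"Instructions: {instructions}",
            f"Examples: {self.examples}",
            f"Format: {self.output_format}",
        ])


# The reusable setup for a claims diary note, taken from the example above.
DIARY_NOTE = BriefTemplate(
    background="Manufacturing company in Iowa.",
    role="Claims examiner.",
    examples="Use empathetic language.",
    output_format="Short paragraph for diary note.",
)
```

With the setup saved, each request is one line, for example `DIARY_NOTE.build_prompt("Summarize today's call with the supervisor.")`. Notice that nothing in the stored setup identifies a person or a claim.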

Uploading Documents: Adding Context at Scale

One of the most powerful features of custom GPTs is the ability to upload documents. Imagine feeding your claims manual, return-to-work policy, or state-specific statutory language into the GPT. Instead of flipping through PDFs or searching intranet pages, you can ask, “What are the restrictions for temporary total disability in Iowa?” and get an answer based on your uploaded resources. This is a major time-saver, but it also reduces the risk of misinterpretation when staff are unfamiliar with a particular state’s rules. With accurate references built right into the GPT, new team members get up to speed faster and seasoned professionals stay consistent.

A Workers’ Comp Example of a Custom GPT

Want to build a Return-to-Work Assistant GPT? I know I do. What you can do is upload your company’s RTW policy and feed it sample letters you have used in the past. (Make sure to redact any personal information.) You configure the GPT so that it always writes in plain, supportive language, frames modified duty as a healing support, produces letters under 200 words, and suggests talking points for supervisors. When someone types: “Draft a modified duty offer for an injured worker with lifting restrictions after a back sprain,” the GPT already knows the policy, the tone, and the structure. The result is consistent and empathetic communication, without requiring anyone to rewrite the same background every time.

How to Build One (Without the Scary Tech Talk)

The good news is that building a custom GPT is easier than it sounds. OpenAI provides a GPT Builder that walks you through the process in plain language, like a lab partner explaining each step. You answer a series of questions: what should the GPT know, how should it behave, and what resources should it draw from? You can test your GPT as you build it, refining until it works the way you want. Paid ChatGPT users can create GPTs, but once built, they can be shared with free users as well. This means your investment benefits your entire team, regardless of who has a subscription.

Other platforms are developing similar tools, which shows how valuable this concept has become. Microsoft Copilot offers “Agents” for paid users, and Google Gemini provides “Gems,” which can be built even by unpaid users. These features allow professionals across industries to build assistants tailored to their needs. For workers’ compensation, we can experiment across platforms and identify which tool integrates best with our systems. The landscape is evolving quickly, and those who test now will be best positioned to lead later.

Class Takeaway

Custom GPTs are the next step in our AI journey. They evolve naturally from the prompt libraries we explored last week, simplifying repeated tasks, embedding context, and creating consistency across communication. In workers’ compensation, they are a cultural tool that helps us care for people while protecting process. The real magic lies in how these tools save time while elevating the quality of human connection in claims management. By leaning into innovation, we shape the future of this industry with intention and heart.

Here’s your homework: think of one area of your work where you repeat the same prompts weekly. Is it claims letters? Policy summaries? Supervisor coaching scripts? Imagine how much time you could reclaim if you had a custom GPT already programmed with your tone, format, and examples. Then, take a bold step: build one. Even if it is small, the act of experimenting is the first step toward transforming how we work. The lab is open, the tools are here, and the future of workers’ compensation is waiting for us to step in.

Class dismissed.

Math Class: Reasoning Models for Real-World Decisions in Workers’ Compensation

The Trained A-Eye

Welcome back to class, friends! Sharpen those pencils and grab your calculators. Today we are heading into Math Class. In workers’ compensation, so much of our world is built on logic, planning, and pattern recognition. From setting reserves to structuring return-to-work plans, success comes down to how we connect data and human insight. This week, we are talking about reasoning models, the next evolution in AI tools designed to “think” before they speak. These models do more than identify patterns. They pause, process, and provide thoughtful, step-by-step conclusions, the kind of disciplined thinking we love in our industry!

This project continues to be inspired by my professor friends Chris Snider and Christopher Porter, the Innovation Profs, who created the AI Summer School series. Their exploration of reasoning models immediately caught my attention because it speaks to how we make decisions in workers’ compensation every day. Our industry depends on critical thinking, accuracy, and accountability. Translating the Innovation Profs’ work into our world gives us an opportunity to explore how reasoning models can help us strengthen both the analytical and the human sides of our work. This is where logic meets empathy. Plus, a little sparkle never hurts!

What Are Reasoning Models?

Reasoning models are a newer generation of large language models that go beyond predicting the next word. Traditional models focus on the speed of getting to the “answer” as fast as possible. Reasoning models, on the other hand, slow down and plan before they respond. They generate an internal chain of thought, check their logic, and then deliver a concise, accurate response. Reasoning models act like a thoughtful colleague who sketches ideas on the whiteboard, talks through the logic, and then summarizes the best answer for everyone to follow.

Reasoning models such as OpenAI’s o-series, Anthropic’s Claude 4, and Google’s Gemini Pro represent the most advanced versions available right now. These models are designed to handle tasks that require structured reasoning such as math, law, financial forecasting, and complex decision-making. For workers’ compensation, this means reasoning models can analyze claims data, forecast cost exposure, or help structure return-to-work timelines in ways that are both detailed and digestible. Think of it like having an analytical partner who does not get overwhelmed by the paperwork.

Why Reasoning Models Matter in Workers’ Compensation

Our work in workers’ compensation is rarely simple. Decisions require layers of logic because this space operates with policy language, jurisdictional nuances, medical details, human factors, and budget implications. Reasoning models thrive in multi-step environments like the world of workers’ compensation. These models organize the chaos: they can follow the full path from data to decision, considering each factor instead of jumping to conclusions. By offloading the mental heavy lifting, reasoning models give us more space to focus on empathy, strategy, and the human connections that drive recovery.

How Reasoning Models Work

When we ask reasoning models a question, an internal “chain of thought” is created. You might see a note like “thinking for 40 seconds” before the answer appears. This is the model mapping out its logic. The result is an explanation of how the model got there in addition to the answer. That transparency helps us check the reasoning, spot assumptions, and refine prompts for even better results.

For example, if you ask, “Based on these restrictions and wage details, estimate temporary total disability exposure for the next six months,” a reasoning model will not guess. The model will weigh average recovery durations, modified-duty options, wage replacement calculations, and potential medical interventions. The reasoning model will then summarize the logic, showing how each factor contributed to the estimate. This step-by-step approach mirrors the way seasoned claims adjusters think, only faster.
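To show what "showing the work" looks like for the wage replacement piece alone, here is a simplified arithmetic sketch. Everything in it is an assumption for illustration: the two-thirds compensation rate, the optional weekly maximum, and the function name are placeholders, and real benefit formulas differ by jurisdiction. Always use your state's actual rules.

```python
def estimate_ttd_exposure(average_weekly_wage, weeks_off_work,
                          compensation_rate=2 / 3, weekly_maximum=None):
    """Estimate wage replacement exposure, returning each step of the math.

    compensation_rate and weekly_maximum are illustrative placeholders,
    not any jurisdiction's actual statutory values.
    """
    if average_weekly_wage < 0 or weeks_off_work < 0:
        raise ValueError("wage and weeks must not be negative")

    # Step 1: weekly benefit before any cap.
    weekly_benefit = average_weekly_wage * compensation_rate

    # Step 2: apply the weekly maximum, if one was supplied.
    if weekly_maximum is not None:
        weekly_benefit = min(weekly_benefit, weekly_maximum)
    weekly_benefit = round(weekly_benefit, 2)

    # Step 3: multiply by the expected number of weeks off work.
    total = round(weekly_benefit * weeks_off_work, 2)

    return {
        "weekly_benefit": weekly_benefit,
        "weeks": weeks_off_work,
        "total": total,
    }
```

For a hypothetical worker earning $900 per week who is expected to be off for 26 weeks, `estimate_ttd_exposure(900, 26)` returns a weekly benefit of 600.0 and a total of 15600.0. Each step is visible, so you can check the logic and swap in the right numbers for your jurisdiction.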

Real-World Applications in Workers’ Comp

Think about reserves. Setting reserves is where reasoning models can make a real difference. If you input injury details, jurisdiction, and historical claim data, the model can produce projected medical, indemnity, and expense reserves with justification for each category. It can compare optimistic, expected, and conservative outcomes and flag the conditions that might trigger a change. This helps new adjusters learn faster and ensures consistency across teams.
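Here is a small sketch of the optimistic, expected, and conservative comparison described above. The dollar figures are made-up placeholders, not benchmarks or recommendations, and the function names are hypothetical. The goal is only to show the structure: three scenarios, three reserve categories, and a total for each.

```python
# Hypothetical reserve figures in dollars, for illustration only.
SCENARIOS = {
    "optimistic": {"medical": 4000, "indemnity": 3000, "expense": 500},
    "expected": {"medical": 7500, "indemnity": 6000, "expense": 1000},
    "conservative": {"medical": 15000, "indemnity": 12000, "expense": 2500},
}


def total_reserves(scenarios):
    """Add up medical, indemnity, and expense reserves for each scenario."""
    return {
        name: sum(categories.values())
        for name, categories in scenarios.items()
    }


def reserve_range(scenarios):
    """Return the lowest and highest scenario totals as a (low, high) pair."""
    totals = total_reserves(scenarios)
    return min(totals.values()), max(totals.values())
```

With these placeholder numbers, `total_reserves(SCENARIOS)` gives 7500, 14500, and 29500 for the three scenarios. Laying the categories side by side makes it easy to ask which assumption is driving the gap.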

In return-to-work planning, reasoning models can develop phased schedules that align medical restrictions with safe job tasks. How many times have you heard an employer or leader say they have no modified duty options available? Turn to the reasoning model. These models can generate scripts for supervisors, communication plans for employees, and checklists to track progress. These models can also integrate ADA considerations and highlight milestones for reevaluation. When someone is juggling multiple cases, having a structured, logic-based assistant keeps everyone aligned.

Even in policy interpretation or compliance work, reasoning models shine. You can paste statute excerpts or policy language into the model and ask for a plain-language summary of what is required versus what is recommended. You can then ask for a version tailored to supervisors, employees, or executives, and even ask the model to break it down like you are a fifth grader. The model can even generate documentation checklists, turning abstract rules into actionable steps. Action is power!

Tips for Getting Better Results

Using reasoning models effectively requires intentional prompting. Start by asking the model to “walk through its thinking step by step before deciding.” This type of prompting encourages the reasoning that separates strong results from generic ones. Ask the model to “compare two or three possible approaches” before recommending one, which can help uncover creative options that may not have been considered. You can also ask the reasoning model to cite its sources. If you are like me, you want to know where the information is coming from.

Ideally, you want to educate the reasoning models to respond in a manner that is accurate and manageable. If you are brainstorming solutions, a prompt such as “Give me three very different options” expands the conversation. When you need real-world context, specify it: “Use examples from workers’ compensation data” or “Frame this for a self-insured employer.” The more context you provide, the more usable the output becomes.

Keeping the Human in the Loop

As powerful as reasoning models are, remember that they are tools. These tools are not replacements for professional judgment. As the humans behind the tool, we must still verify outputs, maintain data privacy, and use discernment before acting on recommendations. These models do not hold claims adjusting licenses or carry empathy. Reasoning models are here to enhance and support what humans already bring to the table. The goal is to amplify your expertise. These models handle the structure so we can handle the soul. When we combine AI’s logic with human empathy, programs become smarter, faster, and more compassionate.

Class Takeaway

The disciplined thinkers of the AI classroom? Meet our reasoning models. These models take time to plan, analyze, and explain. For workers’ compensation, they represent a natural evolution toward precision and trust. Whether you are using them for reserve analysis, return-to-work design, or compliance interpretation, reasoning models can help you make better decisions faster, with greater transparency and consistency.

Your homework: test a reasoning model this week on one claim scenario. Ask it to “show its work,” evaluate the steps, and then compare its reasoning to your own. The more you experiment, the better you will understand where these tools shine, and where your human insight still leads the way.

Class dismissed.

History Class: Deep Research in Claims & Medical Management

The Trained A-Eye

Welcome back to class, friends! We have been sharpening our pencils, building our libraries, and experimenting in the lab. Now, it is time to head into History Class. History is where we dig deeper, uncover sources, and learn from the wisdom already written. In the world of AI, this lesson is about Deep Research, one of the newest and most powerful capabilities that turns curiosity into actionable insight. And just like in workers’ compensation, where we must often trace the story behind the injury or the evolution of a claim, Deep Research gives us the ability to go beyond the surface and understand the full context.

This project continues to be inspired by my professor friends Chris Snider and Christopher Porter, the Innovation Profs, who created the AI Summer School series. Their exploration of Deep Research reminded me so much of our industry. In workers’ compensation, we need to be asking better questions, following the threads, and seeing the bigger picture. Chris and Chris used the Deep Research tool to study how to grow their newsletter. This got me thinking that the same approach could change how we research claims trends, evaluate medical data, or shape injury-prevention strategies. Their curiosity fueled innovation in communication, and now we are bringing that same spirit into the heart of workers’ compensation!

What Is Deep Research?

Deep Research is an agentic AI capability. This is a fancy way of saying it is a model that can take your question, search the web in multiple steps, read credible sources, and compile a comprehensive, citation-backed report. Instead of giving you a quick summary like a standard model, Deep Research goes out, reads what is actually out there, and then comes back with a full explanation complete with sources, links, and context. Think of this as the difference between asking a coworker a question and hiring a full research assistant who brings back the data, the references, and a neat executive summary.

In workers’ compensation, this can be a powerful tool. Imagine being able to ask, “What are the latest treatment success rates for lumbar strain in manufacturing employees?” or “What are the best practices for preventing delayed recovery?” and getting a six-page report summarizing the top medical journals, regulatory updates, and evidence-based approaches. You could have your own in-house research department without waiting three months for a white paper! (This gets exciting for some of us research nerds… yes, that’s me!)

Why Deep Research Matters in Workers’ Comp

Our industry thrives on patterns, precedent, and proof. Whether analyzing loss runs, evaluating treatment guidelines, or developing safety protocols, we rely on data to make informed choices. Deep Research helps us find that data faster and interpret it more effectively. Instead of scrolling through outdated PDFs or trying to recall which state revised its telehealth reimbursement last quarter, you can ask Deep Research to find, verify, and summarize the information for you.

For example, an adjuster could use Deep Research to examine trends in delayed reporting or psychosocial barriers to recovery. A risk manager could use it to identify the latest workplace safety innovations in similar industries. A medical management professional could use it to compare surgical outcomes versus physical therapy recovery rates across patient populations. These insights allow us to make data-driven decisions, not gut guesses, and that precision strengthens both claims outcomes and communication.

Real-World Applications

Time to bring this into everyday practice. Picture a claims team preparing for an employer’s quarterly review. Instead of manually pulling reports, they ask Deep Research to explore “emerging musculoskeletal injury trends in light industrial workforces in 2024–2025.” The tool reads current studies, regulatory updates, and industry publications, then delivers a structured, sourced summary with recommendations. The team walks into the meeting prepared to discuss prevention strategies, predictive analytics, and training recommendations with confidence and authority.

Or think about a nurse case manager managing a high-cost chronic pain claim. By using Deep Research, they can pull evidence-based approaches for multidisciplinary pain management and identify which treatments have the strongest recovery outcomes. This helps guide provider conversations and supports justification for utilization review. When done well, it enhances both quality of care and cost control. Win-win!

For employers, Deep Research can identify the top trends in employee engagement around safety and wellness programs. Want to know which communication strategies reduce incident rates or which ergonomic investments yield the best ROI? Deep Research can find the data and compile it into an actionable plan. When knowledge becomes accessible, strategy becomes intentional.

How Deep Research Works

Unlike regular AI models that respond instantly, Deep Research takes time. This model is actually thinking. When you activate the tool, it asks clarifying questions to refine the search, ensuring the results are relevant to your true intent. Once you answer those questions, it launches into a multi-step analysis (performing searches, reviewing articles, and cross-checking facts) to build a structured report that references every source it uses. (Make sure to double-check that the references are real, as the models sometimes hallucinate sources!)

You could see updates like, “Researching peer-reviewed studies on return-to-work duration for shoulder injuries” or “Gathering data on state-level indemnity cost trends.” After several minutes, you receive a comprehensive, source-linked document that reads more like a professional briefing than an AI reply. In workers’ compensation this kind of accuracy is invaluable, especially in a space where every jurisdiction, regulation, and case type carries nuance.

Using Deep Research Wisely

With great power comes great responsibility (and yes, a little sparkle). Deep Research is only as strong as the instructions you give it. Prompting is important! The more context you include, the better your results. In workers’ compensation, this could include industry, claim type, audience, and intent. Start with clear prompts like “Research the impact of early intervention on workers’ compensation claim costs” or “Summarize new state legislative trends affecting telehealth reimbursement in 2025.” You could also ask Deep Research to “identify the top three evidence-based treatment strategies for repetitive strain injuries.”

Once you receive the report, take time to review, verify, and synthesize. The tool provides information; you provide the wisdom. Use it as a tool to inform your expertise. Keep an eye on source credibility, especially when citing results for legal or medical decisions. Deep Research is your assistant, a tool to support you.

How It Changes Learning and Leadership

The magic of Deep Research is that it teaches. The more you use it, the more you uncover credible publications, new best practices, and innovative programs across the country. It bridges the gap between theory and application, empowering claims leaders to stay ahead of change. For training, it can generate research packets for new adjusters or HR staff, accelerating their learning curve and reinforcing evidence-based thinking. For executives, it can summarize entire policy shifts or national trends in a single report.

When we combine our professional insight with AI’s ability to synthesize data, we are able to create a stronger, more informed workforce. Deep Research helps us shift from reactive to proactive, from catching up to leading forward. We need to be on the front end of claims, helping injured workers through proactive claims management. In an industry that depends on trust, accuracy, and human care, this tool helps us make smarter decisions faster without losing the heart behind the work!

Class Takeaway

Deep Research is your all-access pass to smarter insights and stronger strategy. Deep Research curates information, connects it, and makes it useful. For workers’ compensation, it means fewer silos, better communication, and faster access to evidence that drives outcomes. The next time you are developing training, revising policy, or analyzing trends, let Deep Research be your partner in discovery.

Your homework: Ask Deep Research to explore a question you have always wanted better data for, something that would truly help your team. Read the report, highlight what stands out, and share one new insight at your next team meeting. Learning together is how we grow stronger. Learn and pass it on!

Class dismissed.

Civics Class: Ethics, Privacy, and Guardrails in AI for Workers’ Compensation

The Trained A-Eye

Welcome back to class, classmates! We are mid-way through the semester and at this point, we have handled math, lab work, and even a trip to the library. Now, time for Civics Class. Civics is where we talk about rules, responsibilities, and what it means to be a good digital citizen in this brave new world of AI. Just like in workers’ compensation, ethics is a foundational component of how we work. Ethics shapes trust. Privacy protects people. Guardrails ensure the work we do serves rather than harms. As AI becomes woven into the daily fabric of claims, communication, and care, it is our job to understand how to use it responsibly, transparently, and with integrity.

This project continues to be inspired by my professor friends Chris Snider and Christopher Porter, the Innovation Profs, who created the AI Summer School series. Their session on AI Ethics reminded me that every great system, whether human or machine, depends on quality values and clear boundaries. The Innovation Profs talk about AI’s “ethical cast of characters,” and I smiled because it feels a lot like a video game. (For me, old school Nintendo of the 1980s.) Each character has a challenge to defeat before advancing to the next level of responsible use. The framework is clear, clever, and what we need in the world of workers’ compensation. Thus, today I present you with a guide for navigating both the risks and the responsibilities that come with innovation.

Meet the Ethical Cast of Characters

Every hero needs to know their villains. When it comes to AI, we face a lineup of ethical challenges with each one requiring awareness, reflection, and action. Think of these as the “boss levels” of the workers’ compensation industry’s AI journey. Time to meet the cast.

The Hallucination Ghost

Hallucination is when AI confidently makes something up. It is the ghost that slips false facts into reports, misquotes laws, or invents data that sound believable but are not true. In workers’ compensation, that can lead to real consequences such as a misrepresented statute, an inaccurate claim summary, or an incorrect medical guideline. Oh, and we have seen this play out in some of our legal groups. The fix? Verification. Always confirm AI-generated content with primary sources, policy manuals, or credible data. If AI writes a letter, check it like you would check a new adjuster’s first draft. Trust is earned, not automated. And always fact check your sources, especially statutes and compliance-related information. Not doing this can hurt you, your organization, and the people you serve.

The Bias Monster

Next up is Bias. Bias is a sneaky monster that feeds on the data AI was trained on. Because datasets come from human writing, they can reflect human biases around race, gender, or occupation. In workers’ compensation, that might look like stereotypes about certain job types or assumptions about injury severity. The solution is to train AI better and audit the results. Ask, “Who benefits from this outcome?” and “What voice might be missing here?” Ethical use of AI means seeing bias before it becomes behavior. We all have bias. Being aware of it and then doing something about it helps regulate our work.

The Copyright Keeper

Enter the guardian of Intellectual Property. AI learns from enormous amounts of existing content, which sometimes includes copyrighted material. For our industry, this means being cautious about how we use and distribute AI-generated materials. This becomes especially important in training, marketing, or consulting work. Always make sure content is original or appropriately cited. Give people credit where credit is due. Think of it as respecting your colleagues’ work the same way you would want your own reports or playbooks respected. In short: cite your sources and stay classy.

The Privacy Protector and Security Sentinel

These two travel together like a superhero duo: Privacy and Security. They guard the sensitive data we handle daily, including but not limited to medical information, payroll details, employer records, and claim notes. AI tools can make processing faster, but only if we protect what matters most. Never input personally identifiable information or claim-specific details into a public model. Use secure, approved systems for analysis, and anonymize examples whenever possible. Data privacy is a promise to the people we serve. Flip the script. Would you want your personal data input into public AI? Probably not.
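For the technically curious, here is a minimal sketch of what "anonymize examples" could look like before text ever reaches an AI tool. The three patterns and the `redact` helper are assumptions made up for illustration. They catch only a few obvious identifiers and are no substitute for your organization's approved de-identification process.

```python
import re

# Hypothetical patterns for illustration only. Real claim notes contain many
# more identifier types (names, addresses, claim numbers) than these cover.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Made-up example note; none of these details belong to a real person.
note = "Worker (SSN 123-45-6789) can be reached at 515-555-0100 or pat@example.com."
print(redact(note))
```

A human should still read the redacted text before sharing it, since a name or diagnosis can identify someone just as easily as a number.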

The Green Guardian

Meet Environmental Impact. The Green Guardian reminds us that technology, while immaterial, is not invisible. Training large AI models takes massive energy and water resources. While that may feel far removed from workers’ compensation, this is a nudge to think sustainably about digital practices. Do we really need to regenerate that prompt five times? Are we saving outputs for reuse to minimize resource waste? Ethics includes stewardship, meaning that we all have a responsibility to take care of the environment while we innovate. Even small choices can ripple into big change.

The Workforce Transformer

Workforce Displacement is less a villain and more a complex character in this story. AI changes how we work in workers’ compensation; it does not necessarily eliminate people. This is where we must reframe the work and its purpose, and highlight the significance of human connection. In practice, that can mean automation handling repetitive documentation while humans focus on empathy, negotiation, and connection. Our challenge is to upskill, reskill, and empower our teams to use AI as a collaborator, a resource, and a tool. The most successful professionals will be those who learn to lead AI rather than trying to compete with it. This transformation is an invitation to grow. Work smarter, not harder. And do it well.

The Misinformation Magician

Now comes Misinformation, the trickster among our video game-style characters. This is the risk of using AI-generated content without fact-checking, or of seeing false information circulate in claims communication or policy documents. For example, an AI summary might misinterpret new case law or rely on outdated medical guidance. The antidote? Transparency. When you share AI-assisted work, disclose it. When you publish, verify it. Misinformation only wins when we fail to slow down and think critically.

The Harmful Content Hydra

Last, and certainly not least, is Harmful Content. This character is the many-headed hydra of the internet. AI models are trained on massive datasets, and as a result they can accidentally reproduce harmful or offensive material. This is especially critical when communicating with injured workers, where empathy and psychological safety matter. Always review tone and phrasing, especially when dealing with sensitive topics like pain, disability, or termination. Our words can either heal or harm. Choose them with heart and remember, these word choices ultimately reflect your character and personal brand, and they impact your organization.

How to Stay Ethical in an AI World

How do we defeat this cast of characters? With knowledge, clarity, and accountability. Some challenges can be managed through vigilance and good human editing to ward off hallucination and bias. Others, like privacy, intellectual property, and misinformation, require organizational policies and sometimes even legal oversight. Ethics is a value and impacts a collective organizational culture. The key is to integrate ethics into every step of the process. Build privacy policies that cover AI use. Train employees on proper input boundaries. Establish internal review procedures for AI-generated communication. And most importantly, lead by example! If you are transparent, intentional, and kind in your use of these tools, your team will follow suit. Ethics begins with everyday choices, not policy manuals. Your employees and peers are watching. What is not addressed and brought to light affirms behavior.

Why This Matters in Workers’ Compensation

Workers’ compensation runs on trust between employers and employees, between claims professionals and injured workers, and between companies and their partners. Trust is built when our words match our values. Using AI responsibly ensures that we maintain that trust while embracing innovation. We can use these tools to improve efficiency and access, but the human element must always lead. We can approach AI through the lens of care by protecting privacy, honoring fairness, and maintaining transparency. When we do so, we strengthen the entire ecosystem. We prove that progress and ethics can coexist beautifully. In a space that touches people’s lives so deeply, this combination is essential.

Class Takeaway

AI ethics builds a world where innovation supports integrity and efficiency amplifies empathy. As we move forward, remember the ethical cast of characters, and keep your own guardrails strong. Lead with curiosity, protect with conscience, and communicate with compassion.

Your homework: Review one area of your work where you are already using or planning to use AI. Identify which ethical “character” might appear there: hallucination, bias, privacy, or misinformation. Create a plan to address it. Responsibility starts with awareness and ends with action.

Class dismissed.

Economics Class: Implementing AI for Efficiency, Savings, and Smarter Spend

The Trained A-Eye

Welcome back to class, classmates! We studied ethics, reasoning, and deep research. Now I welcome you to Economics Class, where we talk about what everyone secretly loves to measure: time and money. In workers’ compensation, both are precious resources. We are always looking for ways to streamline processes, reduce costs, and improve outcomes. We don’t do this just for efficiency’s sake; we do this so we can spend more time where it matters most: helping people heal and thrive. This week, we are learning how to implement generative AI directly into our daily work for smarter spend, higher productivity, and more peace of mind.

This project continues to be inspired by my professor friends Chris Snider and Christopher Porter, the Innovation Profs, who created the AI Summer School series. Their lesson on implementing AI at work made me think about how perfectly this applies to workers’ compensation. Our world runs on repeatable processes, from claims documentation to compliance, from safety training to reporting. What is great about these processes is that they are all ripe for automation when it is used thoughtfully. Chris and Chris focused on a tool called JobsGPT, which analyzes job roles and shows how AI can streamline daily tasks. I thought: what if we had the same concept for claims professionals, HR leaders, and risk managers? The result could change not only how we work, but how we feel at work.

Where to Begin: Breaking Down the Job

Start by brainstorming the tasks that fill your day. Claims professionals spend hours writing letters, summarizing medical notes, documenting conversations, and preparing reports. HR leaders write policies, update training manuals, and handle employee communication. Safety managers collect data, review incident reports, and conduct investigations. These are all areas where generative AI can help when used with structure and care. The first step is identifying which tasks are repetitive, time-consuming, or require the same pattern of logic each time. Those are your entry points. Once you list them, think about whether AI could assist with drafting, summarizing, analyzing, or communicating. Start small. Identify one task that drains time every week. Maybe it is summarizing telephone conversations, reviewing provider notes or incident reports, or writing a return-to-work letter. Begin there. That is your opening door to efficiency.

Quick Wins: Do These Now

Quick wins are tasks AI can help with right away. These are low-risk, high-reward activities that do not touch private data or sensitive information. For example, you can use AI to draft first-pass content such as employee newsletters, safety bulletins, or training outlines. It can also be used to summarize lengthy meeting notes into clear action steps or help you reframe meetings that could have been an email. AI can also help you brainstorm ideas for conference presentations, program names, or claims communication campaigns. These quick wins free up time immediately. They are your “instant ROI” projects that typically take thirty minutes but now take five. That time savings improves your calendar and your clarity. Instead of rushing from task to task, you can slow down long enough to think strategically about your team, your claims, or your client relationships. Efficiency creates space for humanity.

Definite Don’ts: Keep These Human

Not everything belongs in AI’s hands. Some tasks are too sensitive, nuanced, or personal to delegate. Anything involving confidential information, including but not limited to claim details, medical records, or personal employee data, should stay within your secure systems. Check your organization’s policies on AI use. The same goes for decision-making around ethics, performance management, or return-to-work accommodations. Why? Tone and judgment are still human art forms. AI can draft, but it cannot discern emotion. AI does not sense when a worker is scared about surgery or when a supervisor feels frustrated but misunderstood. That is where your leadership and empathy shine. Think of AI as your assistant, not your replacement. You lead the conversation, and let AI take notes.

In-Between Cases: Test and See

The in-between category is where things get interesting. These are tasks that might benefit from AI, but only after experimentation. This is your testing ground for growth. You might try using AI to generate first drafts of policy updates, training quizzes, or standard letters, then compare those to your current process. You could test how AI analyzes large amounts of data, like loss run summaries or survey responses. Run side-by-side trials. Compare quality, accuracy, and time savings. Some outputs will amaze you while others will need major revision. Experimentation is where the magic happens. Create your own AI playbook, the set of tools and prompts that make your work better, faster, and more enjoyable. Again, check your organization’s policies first!

The 70/20/10 Rule for Implementation

Once you start experimenting, use the 70/20/10 rule. Let AI handle 70% of the work such as the drafting, summarizing, or analysis. You take 20% to refine, edit, and ensure accuracy. Then use the final 10% to add your personal touch: empathy, tone, or strategy. This balance ensures that your communication stays human while your workload stays light. Imagine AI drafting a complex return-to-work plan. It structures the timeline, references modified duty tasks and writes the base letter. You then review, add empathy-driven phrasing, and ensure it aligns with your company’s voice. The end product? Professional, efficient, and heartfelt in less than half the time.
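If it helps to see the 70/20/10 split as simple arithmetic, here is a small sketch. The function name `split_effort` and the idea of measuring the split in minutes are assumptions for illustration. The rule itself is about shares of the work, not a stopwatch.

```python
# Shares from the 70/20/10 rule, expressed as whole percentages.
SHARES = {"ai_draft": 70, "human_review": 20, "personal_touch": 10}


def split_effort(total_minutes: float) -> dict[str, float]:
    """Divide a task's total effort across the three 70/20/10 stages."""
    return {stage: total_minutes * pct / 100 for stage, pct in SHARES.items()}


# Hypothetical example: a return-to-work letter treated as 60 minutes of work.
for stage, minutes in split_effort(60).items():
    print(f"{stage}: {minutes:.0f} minutes")
```

In this example the two human stages add up to 30% of the effort. That is the part that keeps the letter accurate and heartfelt.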

Why This Matters for Workers’ Compensation

Generative AI helps with precision, consistency, and better human outcomes. In our industry, speed is important, and accuracy and tone are everything. Using AI strategically can reduce administrative friction and increase emotional bandwidth. Adjusters can spend more time talking to injured workers instead of typing. HR leaders can focus on culture instead of clerical work. Safety managers can prioritize prevention instead of paperwork. There is also a financial upside. Of course there is; this is an effective business strategy! When communication improves, litigation drops. When training is consistent, injury frequency declines. When return-to-work is personalized, claims close faster. Each of these results translates to measurable savings. Implementing AI thoughtfully can generate compassion-based ROI with a return on integrity as well as investment.

Building Your Implementation Plan

Where to begin? Start with small steps to implementing AI in your job:

Step 1: Map your tasks. List everything you do in a typical week, from claims documentation to internal communication.

Step 2: Label each as a Quick Win, Don’t, or Test & See. Be honest about what drains your time and what requires your expertise.

Step 3: Pilot one or two quick wins. Track time saved, quality of results, and your overall workload balance.

Step 4: Establish guardrails. Protect data privacy, maintain ethical standards, and clearly define what AI can and cannot do in your role. Double check your organizational specifications around AI.

Step 5: Share your results. Bring what you learn to your team or leadership. Show how AI can enhance the human side of work by working smarter, not harder.
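For readers who like to see a plan written down, the first three steps can be sketched as a tiny script. Everything here is hypothetical: the `Task` fields, the labeling rule, and the sample tasks are one possible way to organize the exercise, not an official method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    touches_private_data: bool  # claim details, medical records, employee data
    needs_human_judgment: bool  # ethics, performance, accommodations
    low_risk: bool              # safe to try with AI right away


def label(task: Task) -> str:
    """Step 2: sort a task into Quick Win, Don't, or Test & See."""
    if task.touches_private_data or task.needs_human_judgment:
        return "Don't"
    if task.low_risk:
        return "Quick Win"
    return "Test & See"


def minutes_saved(before: int, after: int, times_per_week: int) -> int:
    """Step 3: weekly time saved by a piloted task."""
    return (before - after) * times_per_week


# Step 1: map your tasks (made-up examples).
tasks = [
    Task("Draft safety bulletin", False, False, True),
    Task("Decide return-to-work accommodation", True, True, False),
    Task("Draft training quiz", False, False, False),
]

for task in tasks:
    print(f"{task.name}: {label(task)}")

# A thirty-minute task that now takes five, done three times a week.
print(minutes_saved(30, 5, 3), "minutes saved per week")
```

The labels are only a starting point. Your organization's AI policy, not a script, has the final say on what belongs in each bucket.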

Leadership Lessons from Implementation

Leaders set the tone for how technology is received. When you model responsible experimentation, you empower your team to do the same. Encourage questions, celebrate small wins, and share best prompts in your team’s prompt library. Remember that change fatigue is real, and we are changing at a rapid pace in our present environment. For some people, AI feels intimidating or even threatening. Approach those feelings with empathy and reassurance. Make it clear that the goal is enhancement, not replacement. When people feel safe to learn, they innovate faster. This is how we future-proof the workforce: one conversation, one tool, one confident experiment at a time. Teach yourself something new, and then go share it with someone else.

Class Takeaway